Building a Scrape-Delta-Vectorize backend pipeline entirely in Supabase

A new way to build backends: is this the rebirth of Smalltalk-style programming?

I’m sick of spending so much time using git. I’m sick of branching. I’m sick of merging. I’m sick to death of merge conflicts. If I never rebased again in my entire life I’d be better for it. I spend so much time rewriting source control history that it’s become almost as much of a time sink as writing the code itself. It’s the last thing I want to deal with in my personal projects.

At the same time, I hate graphical low-code environments like n8n. They are slow and clunky, they make life hard for the expert, and they don’t give you enough productivity in return.

I just want to build software as god Alan Kay intended: Live in prod on a continuously running system that encapsulates its own state with actual code. Living dangerously.

No check-ins, no branching, no merges. Just doing it live right in prod. The closest thing you can get to this today is SQL. On a SQL database you create functions on the fly, build data tables, code up real time events that pump data around. Most support some type of Turing Complete programming language. You can even live-edit data, and it all persists through system restarts. Squint with me for a moment and consider how similar this is to Smalltalk.

The thing is, does source control really matter quite so much when you can just pop open your AI and one-shot almost any function you might care to write? If you’re following the recent discourse around spec-driven programming by some of the masterminds at Microsoft, you’ll know they certainly don’t think so. Many think the spec is now the most important artifact, not the code, and I’m inclined to agree (can’t wait to try out the new spec language, by the way).

So when I recently had a small, self-contained project that was entirely backend and needed to be done on short notice, I didn’t want the overhead of any of those typical SDLC-laden processes. I had recently been using Supabase as a platform for a different project and it all suddenly clicked: I could just use Supabase directly for the entire backend end-to-end, and it would be pretty slick, and very fast. (Really, the only thing Supabase is missing for 100% end-to-end hosting is static website hosting. I hope they remedy this.)

The other great thing about Supabase is that they give free hosting for hobby projects. This makes it super easy to get going with them for new projects, and then if you want to make it “real” later, you just whip out the ol’ credit card. I promise they’re not paying me to say this, I’m just a huge fan.

Supabase gives you well-supported Postgres out of the box, along with a litany of other things. For this project I only cared about three: Vault (for secrets), Edge Functions (for things not so pleasant to write in SQL), and pg_cron (for managing job execution).

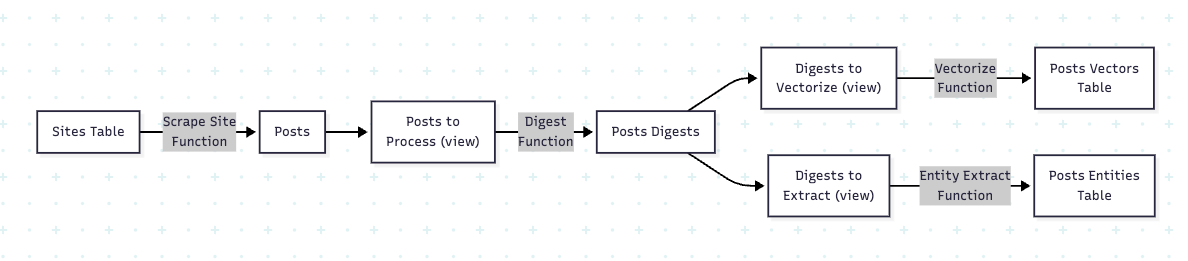

The general data flow looks something like this:

Each of the connections between the tables is a TypeScript edge function with a helper view that compares the input and output tables and shows what work is yet to be done. Something like:

Input Table → What to Process View → Edge Function → Output Table
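One hop of that chain can be sketched as a generic “pump” step. This is a minimal sketch, not the code from the project: the `PumpDeps` interface and the names `fetchPending`, `process`, and `writeOutput` are hypothetical, and inside a real edge function they would wrap a query against the helper view and an insert into the output table.

```typescript
// Hypothetical shapes: a row waiting in the "what to process" view,
// and a row to be written to the output table.
interface PendingRow { id: number; payload: string; }
interface OutputRow { sourceId: number; result: string; }

// The three things one pump step needs, injected so the loop itself
// does not care where the rows come from or go to.
interface PumpDeps {
  fetchPending(limit: number): Promise<PendingRow[]>;
  process(row: PendingRow): Promise<string>;
  writeOutput(row: OutputRow): Promise<void>;
}

// Process one batch. A failure on one row is recorded and the loop moves on;
// a failed row never reaches the output table, so it is still pending
// the next time the step runs.
async function pumpOnce(
  deps: PumpDeps,
  batchSize = 10,
): Promise<{ done: number; failed: number[] }> {
  const pending = await deps.fetchPending(batchSize);
  let done = 0;
  const failed: number[] = [];
  for (const row of pending) {
    try {
      const result = await deps.process(row);
      await deps.writeOutput({ sourceId: row.id, result });
      done += 1;
    } catch {
      failed.push(row.id);
    }
  }
  return { done, failed };
}
```

Because the view only lists rows with no matching output, re-running the step is safe: finished rows drop out of the view on their own and there is no separate “processed” flag to maintain.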

The exception is the sites table, which needs to be hit repeatedly for all entries on the list, as you want to pull down all new posts whenever they show up. So in the end having a view wasn’t helpful for that case.
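For the sites case the “delta” step comes down to keeping only the posts that haven’t been stored yet. Here is a minimal sketch, assuming posts are identified by their URL; `normalizeUrl` and `findNewPosts` are hypothetical helpers, not the project’s code.

```typescript
// Normalize a URL so trivial variations don't make a stored post look new:
// trims whitespace, drops any #fragment, and removes trailing slashes.
// (Deliberately simple: it does not touch query strings or letter case.)
function normalizeUrl(raw: string): string {
  return raw.trim().replace(/#.*$/, "").replace(/\/+$/, "");
}

// Return the scraped URLs that are not already known, in scrape order.
function findNewPosts(scraped: string[], known: string[]): string[] {
  const seen = new Set<string>(known.map(normalizeUrl));
  const fresh: string[] = [];
  for (const url of scraped) {
    const key = normalizeUrl(url);
    if (!seen.has(key)) {
      seen.add(key); // also de-duplicates within a single scrape
      fresh.push(key);
    }
  }
  return fresh;
}
```

Every site gets scraped on every run, and only the URLs returned here would move on to the next table.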

The Supabase GUI has an edge function editor, so you can just generate your functions with AI, paste them right in there and click deploy, then hit the test button to test it out. It’s a lightning fast loop for writing with AI, deploying it and trying it out.

Note: the test button only shows up after you’ve deployed.

Inside edge functions you can reference web-hosted libraries by URL, but there’s no npm install step, e.g.

import { htmlToText } from "https://esm.sh/html-to-text@9";

You can also call external functions (and keep your API keys securely stored on the secrets tab).
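Calling an external model from an edge function might look roughly like the sketch below. It is written under assumptions: the secret name `HF_API_KEY`, the `endpoint` value, the `{ inputs: text }` request body, and the flat-array response shape are all illustrative and would need to match whatever service you actually call. The secret lookup and `fetch` are passed in so the function doesn’t depend on Deno typings; inside an edge function you would pass `(name) => Deno.env.get(name)` and the global `fetch`.

```typescript
// Minimal structural types so the sketch has no runtime-specific dependencies.
interface HttpResponse {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}
type HttpFetch = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<HttpResponse>;

interface EmbedConfig {
  endpoint: string;                             // hypothetical model URL
  getSecret(name: string): string | undefined;  // reads a stored secret
  fetchFn: HttpFetch;                           // the HTTP client to use
}

// Send text to an embedding endpoint and return the vector it responds with.
async function embedText(text: string, cfg: EmbedConfig): Promise<number[]> {
  const apiKey = cfg.getSecret("HF_API_KEY"); // assumed secret name
  if (!apiKey) throw new Error("HF_API_KEY is not set");

  const res = await cfg.fetchFn(cfg.endpoint, {
    method: "POST",
    headers: {
      "Authorization": `Bearer ${apiKey}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({ inputs: text }), // assumed request shape
  });
  if (!res.ok) throw new Error(`Embedding request failed with status ${res.status}`);

  // Assumes a flat JSON array of numbers; reject anything else loudly.
  const data = await res.json();
  if (!Array.isArray(data) || !data.every((x) => typeof x === "number")) {
    throw new Error("Unexpected response shape");
  }
  return data as number[];
}
```

The key never appears in the source: it is read from the environment at call time, which is what the secrets tab is for.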

With all of this it becomes very easy to iterate on connections to other systems. Here I called Hugging Face for several different models without any issue, and GPT-5 plus one-shotted the edge function code almost every time.

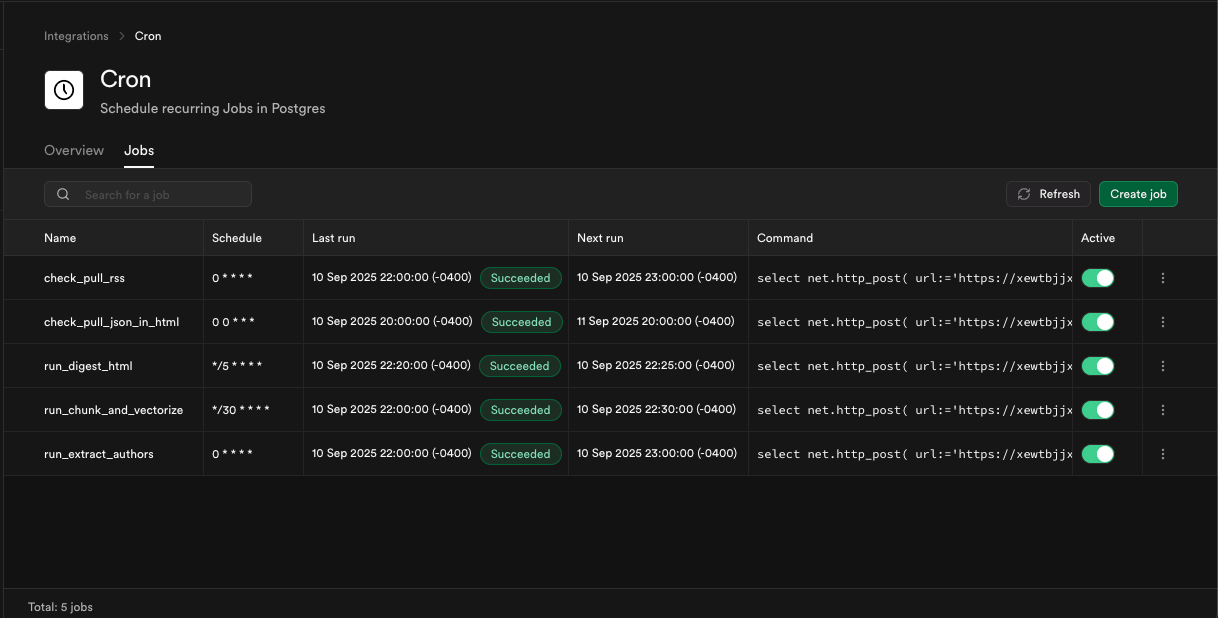

Then you layer on the pg_cron extension and set up your little pump functions to run as often as you’d like, and you have a full-blown data processing pipeline. You could also call the edge functions on data change via webhooks. Supabase has a lot of features and I won’t be covering them all in this post.
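To make the scheduling step concrete, here is a small helper that renders the SQL you might paste into the SQL editor. It is a sketch under assumptions: it relies on the pg_net extension being enabled for `net.http_post`, on the bearer token being stored in Vault under a name you choose, and the job name, URL, and secret name in the usage note are made up. `PumpJob`, `sqlLiteral`, and `renderCronSchedule` are hypothetical names.

```typescript
interface PumpJob {
  name: string;           // pg_cron job name
  schedule: string;       // five-field cron expression
  functionUrl: string;    // full URL of the deployed edge function
  authSecretName: string; // Vault secret holding the bearer token
}

// Quote a value as a single-quoted SQL string literal.
function sqlLiteral(value: string): string {
  return "'" + value.replace(/'/g, "''") + "'";
}

// Render a cron.schedule(...) call whose command POSTs to the edge function.
// The command is wrapped in $job$ dollar quotes, so the inputs must not
// themselves contain the text "$job$".
function renderCronSchedule(job: PumpJob): string {
  const token =
    "(select decrypted_secret from vault.decrypted_secrets where name = " +
    sqlLiteral(job.authSecretName) + ")";
  const command = [
    "select net.http_post(",
    `  url := ${sqlLiteral(job.functionUrl)},`,
    `  headers := jsonb_build_object('Content-Type', 'application/json', 'Authorization', 'Bearer ' || ${token}),`,
    "  body := '{}'::jsonb",
    ");",
  ].join("\n");
  return `select cron.schedule(${sqlLiteral(job.name)}, ${sqlLiteral(job.schedule)}, $job$\n${command}\n$job$);`;
}
```

For example, `renderCronSchedule({ name: "pump-posts", schedule: "*/5 * * * *", functionUrl: "https://example.supabase.co/functions/v1/pump-posts", authSecretName: "anon_key" })` produces a statement that would invoke the function every five minutes.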

Each of those functions gets fairly nice logging and dashboarding out of the box for free too. It’s really quite nice.

Of course you can also create edge functions as APIs for your front end (or use the built in REST APIs). Supabase handles auth and secrets management for you.

There’s a version of this I haven’t tried where you would let Claude Code go ham with the Supabase CLI tool. It could be quite something, although I think it’s just as likely it would steamroll itself into oblivion with breaking changes if you didn’t have the entire database structure laid out cleanly locally for Claude to reference. You’d definitely need to come up with an approach to keep it on rails, and reversible when things go wrong.

And there you go: a RAG scrape-extraction-vectorize backend with no backend server, no git, no deployment pipelines, just Supabase. Just you, GPT-5 and a very fast iteration loop. Truly, is there any delivery more continuous than directly changing things in prod?

I don’t endorse building production applications in this way. I have seen first hand the carnage caused by hands-in-prod database shenanigans and the outages they cause. However, you can build prototypes very fast by leaving the SDLC at the door. This entire project was less than one day of work, around six hours including research and setting up my accounts etc.

If Supabase added some Dropbox-style “just keep each deployed version” rudimentary source control, along with something similar for SQL changes? Well then yeah, this approach would be fantastic for all kinds of small work projects. I hope they do, and I hope they add static web hosting so I don’t have to use an external provider to host my static files.

If you’re interested, let me know and I’ll write more on this topic. Supabase has a great many features to explore with an operating-on-a-live-system mindset.